3.1 Overview of Generative Models

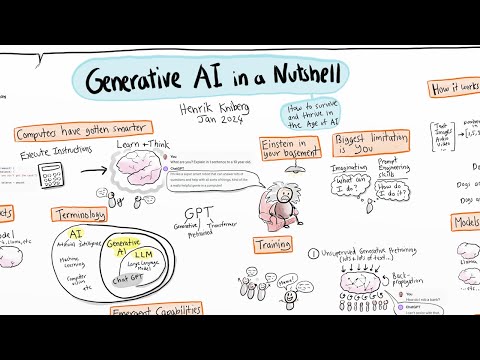

Generative AI models learn the statistical patterns of their training data and then sample from what they have learned to produce new text, images, audio, and more. The goal is not to copy training examples but to capture the underlying distribution well enough that new samples are plausible yet distinct from anything in the training set.

Unlike discriminative models, which categorize or label data by learning the conditional distribution p(y | x), generative models learn the distribution of the data itself, p(x). This makes them useful for creative work, simulation, and innovation. Common architectures include Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs). Additionally, transformer models like GPT have revolutionized text generation through attention mechanisms.
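The idea of learning a distribution and then sampling from it can be illustrated with a deliberately tiny model. The sketch below assumes the data is one-dimensional and roughly Gaussian; the `training_data` values and the function names `fit_gaussian` and `generate` are made up for illustration and do not correspond to any particular library.

```python
import random
import statistics


def fit_gaussian(samples):
    """Estimate the mean and sample standard deviation of 1-D data."""
    return statistics.fmean(samples), statistics.stdev(samples)


def generate(mean, std, n, seed=0):
    """Draw n new values from the fitted Gaussian (seeded for repeatability)."""
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]


# Hypothetical "training data": heights in centimetres.
training_data = [158.0, 162.5, 171.0, 165.3, 169.8, 174.2, 160.1, 167.7]

mean, std = fit_gaussian(training_data)   # "training": learn the distribution
new_samples = generate(mean, std, n=5)    # "generation": sample from it

print(f"fitted mean={mean:.1f}, std={std:.1f}")
print("generated:", [round(x, 1) for x in new_samples])
```

The generated values are not copies of the training points; they are new draws that follow the same estimated distribution. GANs, VAEs, and transformers apply the same principle with far more expressive models.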

3.2 History of Generative AI and Key Milestones

Generative AI has evolved through contributions from pioneering researchers:

- 2013 - Variational Autoencoders (VAEs): Introduced by Kingma and Welling, VAEs marked a leap in unsupervised learning by encoding data into a compressed latent space and decoding it back, forming the basis for more flexible and continuous data generation.

- 2014 - Generative Adversarial Networks (GANs): Introduced by Ian Goodfellow and colleagues, GANs established a competitive framework in which two networks, a generator and a discriminator, are trained against each other, leading to breakthroughs in realistic image generation.

- 2018 - GPT and Transformer Models: The transformer architecture, based on self-attention and introduced in 2017 by Vaswani et al., emerged as a leading technique in natural language generation with OpenAI's GPT series, which began in 2018 and enabled coherent, contextually aware text generation.

- 2020-2023 - Diffusion Models: Diffusion models, used in tools like Stable Diffusion, have recently gained traction for producing highly detailed, photorealistic images through a process that iteratively refines random noise into coherent images.

3.3 Mathematical Foundations and Key Concepts

A deep understanding of generative AI requires knowledge of the following mathematical foundations:

- Probability Distributions: Generative models are trained to learn data distributions, using distributions like Gaussian or Bernoulli to represent the likelihood of different outputs.

- Latent Space: In models like VAEs, data is compressed into a latent space whose dimensions (the latent variables) represent the underlying factors of variation. The model generates new samples by picking points in this space and decoding them.

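- Loss Functions: GANs optimize an adversarial loss: the discriminator is trained to tell real samples from generated ones, while the generator is trained to make the discriminator fail, so each network's loss guides the other's improvement. In VAEs, the loss combines a reconstruction term with a Kullback-Leibler divergence term that keeps the encoded representations close to a target distribution, typically a standard Gaussian.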

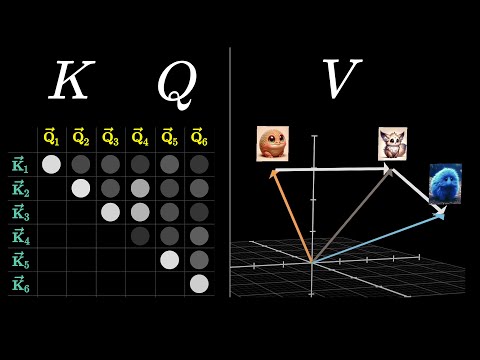

- Attention Mechanisms: Transformers use attention to focus on the most relevant parts of the input sequence, allowing models to maintain context over long passages, a critical advancement for text generation.
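The loss functions named in the list above can be written out in a few lines. This is a minimal sketch under stated assumptions: the VAE encoder is assumed to output a diagonal Gaussian described by `mu` and `log_var`, the GAN losses use the common binary cross-entropy form with the non-saturating generator variant, and all function names are illustrative rather than taken from a library.

```python
import math


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over latent dimensions."""
    return 0.5 * sum(
        math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var)
    )


def discriminator_loss(d_real, d_fake):
    """Cross-entropy loss: real samples should score near 1, generated ones near 0.

    d_real and d_fake are the discriminator's output probabilities in (0, 1).
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return -math.log(d_fake)


# An encoding that already matches the standard Gaussian incurs no KL penalty.
print(kl_to_standard_normal([0.0, 0.0], [0.0, 0.0]))
# Shifting the mean away from zero is penalized.
print(kl_to_standard_normal([1.0, -1.0], [0.0, 0.0]))
# The generator's loss falls as the discriminator assigns its samples higher scores.
print(generator_loss(0.1), generator_loss(0.9))
```

In a full VAE this KL term is added to a reconstruction loss, and in a full GAN the two losses are minimized in alternating steps; both training loops are omitted here.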

3.4 Generative Text Models

Transformers, particularly GPT-style models, use self-attention to assign importance to words in a sequence, creating fluent, coherent text. These models are pre-trained on massive datasets, enabling tasks such as translation, summarization, and dialogue generation.

- Architecture: Transformers employ multi-layered attention and dense network layers, learning word relationships and syntactic structures. This allows them to generate contextually accurate, sequential text by following patterns learned during training.

- Use Cases: These include chatbots, virtual assistants, automated journalism, summarization, and customer support. Models like OpenAI’s ChatGPT and Google’s T5 have been deployed in real-world applications.

- Advances: OpenAI o1 | Claude 3.5 Sonnet | Google Gemma | Grok-2
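The self-attention step described in this section can be sketched in plain Python. This is a simplified illustration, not a production implementation: it assumes a single attention head, omits the learned query, key, and value projection matrices that real transformers apply first, and uses made-up two-dimensional embeddings. The causal mask reflects how GPT-style decoders let each position attend only to itself and earlier positions.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values, causal=True):
    """Scaled dot-product attention over a sequence of vectors.

    With causal=True, position i attends only to positions 0..i.
    Returns the output vectors and the attention weights per position.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        limit = i + 1 if causal else len(keys)
        # Similarity of this query to each visible key, scaled by sqrt(d_k).
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k)
            for k in keys[:limit]
        ]
        weights = softmax(scores)
        # Output is the attention-weighted average of the visible values.
        out = [
            sum(w * v[j] for w, v in zip(weights, values[:limit]))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Toy embeddings for a three-token sequence (made-up numbers).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(x, x, x)
for i, row in enumerate(weights):
    print(f"token {i} attends with weights {[round(w, 3) for w in row]}")
```

Each row of weights sums to 1, so every output vector is a weighted blend of the tokens that position is allowed to see. This is the mechanism that lets the model carry context across a sequence.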

3.5 Generative AI for Code

Code generation models, such as OpenAI's Codex, assist developers by writing code, automating tasks, and debugging. They analyze syntax and patterns across large codebases, learning language semantics and program structures to generate functional code.

- Architecture: Based on transformers, these models adapt to programming languages by learning patterns in syntax, functions, and control structures. This allows them to generate code that is syntactically valid and follows the logic implied by the surrounding context.

- Use Cases: Automated coding in IDEs, error detection, code refactoring, and programming tutorials. Tools like GitHub Copilot facilitate development workflows by suggesting relevant code snippets.

- Advances: Claude Chat | Alibaba Qwen | OpenAI Codex

3.6 Generative Image Models

Image generation relies on GANs, VAEs, and diffusion models. GANs have been particularly successful due to the adversarial dynamic, while diffusion models excel in generating photorealistic visuals by refining noisy data.

- Architecture: GANs pair a generator with a discriminator; the generator improves by trying to fool the discriminator, which in turn learns to tell generated images from real ones. Diffusion models start from random noise and iteratively denoise it into a coherent image.

- Use Cases: Art, design, and media creation. Tools like DALL-E and Midjourney use advanced models for generating custom visuals.

- Advances: Flux AI | DALL-E 3 | Google Imagen 3
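To make the diffusion idea more concrete, the sketch below shows the noising half of the process, which is used during training to produce progressively noisier versions of a clean image. Generation runs the other way, with a trained network removing noise step by step; that network is not shown. The linear schedule and its default values follow a commonly used DDPM-style setup and are assumptions here, as are the function names and the four "pixel" values.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, increasing linearly from beta_start to beta_end."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """alpha_bar_t: running product of (1 - beta_s) for all steps s up to t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    signal = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [signal * v + noise_scale * rng.gauss(0.0, 1.0) for v in x0]


rng = random.Random(0)
betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)

pixels = [0.8, -0.3, 0.5, 0.1]  # hypothetical normalized pixel values
slightly_noisy = add_noise(pixels, 10, alpha_bars, rng)
almost_pure_noise = add_noise(pixels, 999, alpha_bars, rng)

print("signal kept at step 10: ", round(math.sqrt(alpha_bars[10]), 4))
print("signal kept at step 999:", round(math.sqrt(alpha_bars[999]), 4))
```

By the final step almost none of the original signal remains, which is why generation can begin from pure random noise: the model only has to learn how to undo one small noising step at a time.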

3.7 Generative Video Models

Generative video models synthesize videos by managing temporal coherence, often relying on GANs, RNNs, and transformers adapted for video. These models enable applications in content creation and deepfakes.

- Architecture: Transformer-based video models or 3D convolutions handle spatiotemporal data, ensuring smooth frame transitions.

- Use Cases: Media, entertainment, and advertisements, with tools like Synthesia and D-ID offering video creation solutions.

- Advances: Google VideoPoet | OpenAI Sora

3.8 Why Generative AI Is Emerging Now

Three developments have converged: greater computational power, access to large datasets, and refined algorithms (e.g., transformers, diffusion models). Together they have made generative AI practical for applications across diverse fields.

3.9 Ethics and Challenges in Generative AI

- Deepfakes: The potential misuse of deepfakes has raised concerns about privacy and misinformation.

- Bias: Generated data can reflect and amplify biases present in the training data, highlighting the need for careful dataset curation and bias mitigation.

- Environmental Impact: Generative models are resource-intensive, driving a push for sustainable AI practices.

3.10 Future Trends and Research Directions

- Multimodal Models: Unified models that handle text, image, and video data will expand cross-domain applications.

- Real-Time Generation: Advances in efficiency aim to bring real-time generative applications to mainstream technology.